A year ago, in December 2017, we were in the final days of preparation for the UN Secretary-General’s opening of the Centre for Humanitarian Data in The Hague. It was an exciting time, filled with promise and exhaustion, not unlike bringing a new child into the world. My team and I have since been hard at work delivering on our goal of increasing the use and impact of data in humanitarian response. Here are four takeaways from our first year of operations.

1. Measuring the baseline is critical

The Centre was established based on a three-year business plan. It includes a detailed results framework with four objectives that map to our four workstreams: data services, data policy, data literacy, and network engagement. The framework also has three outcomes: faster data, stronger partnerships, and increased data use.

We engaged ODI’s Humanitarian Policy Group (HPG) early on to assess whether our results framework was appropriate and robust, and whether the outputs and outcomes were measurable. The research, led by Larissa Fast, was critical to creating baseline measurements for all of our indicators.

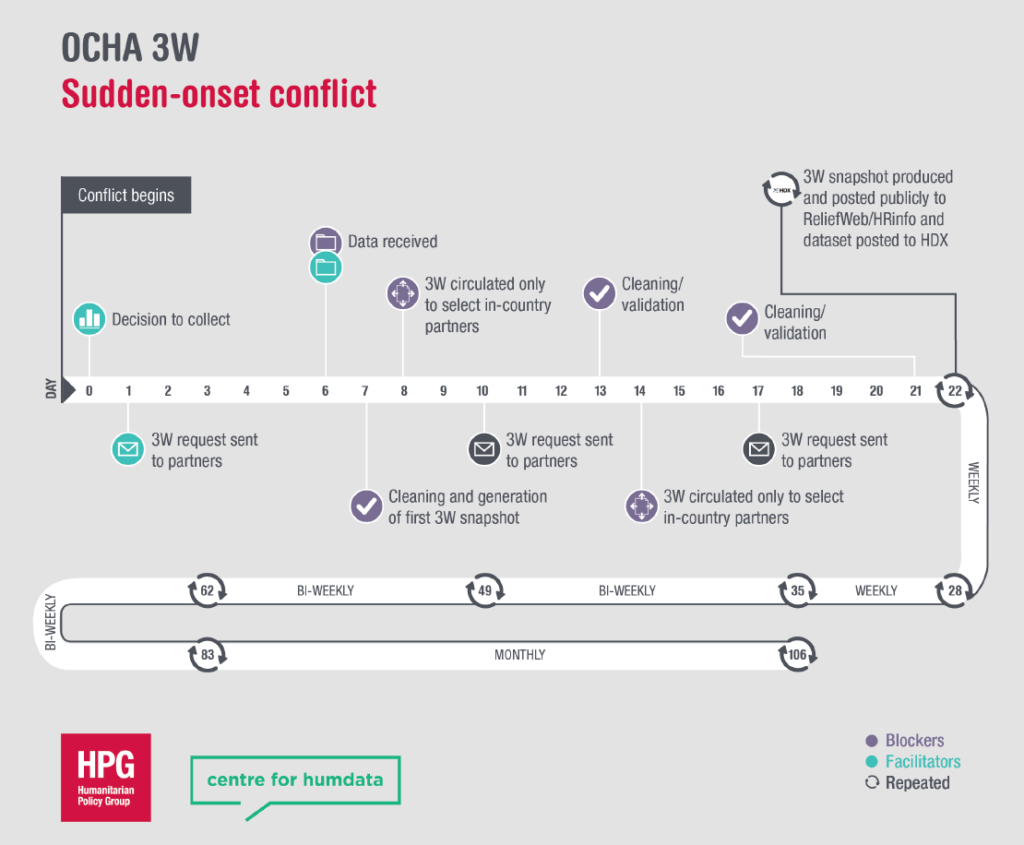

For instance, HPG looked at three crisis-specific datasets and found that it took, on average, 19 days to move from data collection to a published product. They also identified the ‘data bulge’ on the Humanitarian Data Exchange (HDX), which shows that most data is shared 4-5 weeks into a new crisis. We will work to reduce both of these metrics in the coming years. In fact, we just co-authored a blog post with WFP titled ‘Getting up to Speed’ that explains how food data is now moving more quickly from the field to HDX.

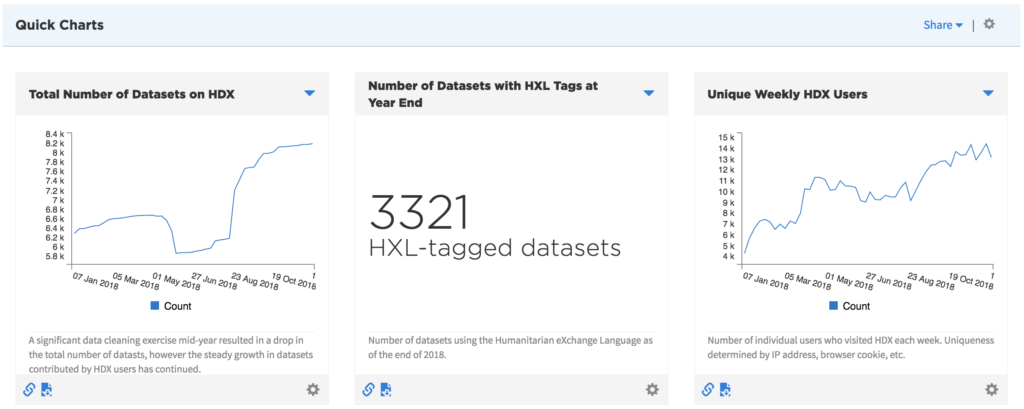

We also made sure we understood levels of engagement with HDX, since we see it as a proxy for increased use of data in the sector. Our target was to double the use of HDX in three years, and we achieved that in the first year. Here are the stats (Dec 2017 through Nov 2018):

- The number of unique users of HDX has grown from 20,632 a month to 53,033 a month since December 2017, a 157% increase.

- 127,968 datasets were downloaded in November 2018, compared with 59,669 in November 2017, a 114% increase.

- HXL (Humanitarian Exchange Language) tags were added to an additional 2,135 datasets in 2018, bringing the total to 3,315 HXLated datasets.

- The total number of datasets on HDX grew by 38% to 8,176.

- Even with the growth in users, the proportion coming from field countries remained steady at 35%. We will continue to engage OCHA field offices to make HDX an essential part of the data workflow.
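For readers who want to check the growth figures, the percentage increases above follow directly from the raw counts. A minimal Python sketch, using only the numbers listed above (the helper name `pct_increase` is illustrative, not part of any HDX tooling):

```python
def pct_increase(before: float, after: float) -> float:
    """Return the percentage change from `before` to `after`."""
    return (after - before) / before * 100

# Monthly unique HDX users, December 2017 vs. November 2018 (from the list above)
users = pct_increase(20_632, 53_033)
# Dataset downloads, November 2017 vs. November 2018 (from the list above)
downloads = pct_increase(59_669, 127_968)
# HXLated datasets that existed before the 2,135 added in 2018
hxl_before_2018 = 3_315 - 2_135

print(f"Unique users: +{users:.0f}%")      # → Unique users: +157%
print(f"Downloads:    +{downloads:.0f}%")  # → Downloads:    +114%
print(f"HXLated before 2018: {hxl_before_2018}")  # → HXLated before 2018: 1180
```

Both rounded results match the 157% and 114% reported above.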

An independent evaluation of the Centre will take place in late 2019 to assess whether we are making sufficient progress to justify continuing beyond the initial three-year period. So far, we are headed in the right direction.

2. It’s about bandwidth, not budget

Prior to managing the Centre, I managed HDX. The Centre grew out of our work on HDX, as we realized that a technical platform alone was not going to be enough to solve the challenges we face in using data better. HDX became part of the Centre’s data services workstream. Over the course of 2018, we stood up three new workstreams for data policy, data literacy, and partnerships. We also held our inaugural Data Fellows Programme in June and July in The Hague.

I found the biggest barrier to getting the Centre up to full speed was my own capacity to manage more people and activities. Previously, I had always ensured that the HDX team had what it needed to grow the platform (data scientists, developers, and designers), but I didn’t grow the management team at the same time.

This became a liability in the first part of 2018. It came to a head during the Data Fellows Programme, when I needed to connect the Fellows with OCHA staff and partners to develop their solutions. Given all of the other work I had to focus on, I ended up being a bottleneck in a way that I hadn’t foreseen. When Stuart Campo and I talked to the Fellows about what to improve and possibly expand for year two, one of them told us to remember that ‘it’s about bandwidth, not budget,’ something that has resonated with me ever since. I am working to address this through the recent hiring of a programme manager and a team coordinator, and through better delegation.

That said, I am thrilled with what the Fellows were able to accomplish in such a short amount of time. Manu Singh’s incredible work on creating a predictive financing model is now being improved upon and expanded to more countries. And Haoyun Su’s work on data storytelling resulted in an amazing piece, ‘Displaced in South Sudan: A journey of 1,000 kilometers,’ that connects people to the experience of one family as it seeks safety during a civil war. (Read Stuart’s reflections on the Fellows programme here.)

3. The model works

The Centre’s business model has two defining aspects: a geographically distributed team and implementation support from UNOPS. We are part of OCHA’s Information Management Branch and have a small number of OCHA staff based in The Hague and Geneva. We work with UNOPS to hire expert consultants who work in locations around the world.

We have field teams in Dakar and Nairobi, both of which are located within the OCHA regional offices. In Jakarta, we are co-located and share resources with Global Pulse. And UNHCR hosts one of our team members in their offices at UN City in Copenhagen. Our global footprint allows us to expand our reach, stay field-focused, and build trusted relationships in the places where our partners are located.

The Centre receives foundational funding from the Netherlands and the city of The Hague. Throughout the year, we have attracted additional support. We are working with the Education Above All Foundation to increase access to data about education under attack. And we will soon initiate a project with ECHO focused on the management of sensitive data and improving data responsibility.

To keep track of our progress, we use the Objectives and Key Results (OKRs) process popularized by Google. We set quarterly targets for what each team and individual team member will deliver, and those targets are shared (through Trello) with everyone on the team in a transparent and non-hierarchical way.

4. ‘Transformation’ no more

Lastly, I noticed over the past year that I stopped using the word ‘transformation.’ It’s not that I don’t believe that things can change. It’s just that the word ‘transformation’ in our sector seems to be more about the promise of change, especially when it comes to ‘digital transformation’ and ‘transformative innovation.’ It is something said at the start of a process to get people on board, or bought in. I am sure I said it when we created HDX in 2014 and when we were getting agreement for the Centre in 2016, but years into the work of delivering on better data, it feels like hype.

This could be cynicism creeping in after 15 years of working in the humanitarian system. I remember the momentum around the 2011 Transformative Agenda, which promised to improve the effectiveness of humanitarian response. This was preceded by Humanitarian Reform in 2005 and followed by the Agenda for Humanity in 2016. These shared agendas are important for getting alignment across partners on what needs to improve, but the sense of accomplishment often comes from reaching collective agreement rather than achieving collective results and impact.

One reason for this is that the humanitarian sector lacks a framework for measuring progress and outcomes along the lines of the Sustainable Development Goals. I am encouraged by the work of Alice Obrecht and colleagues at ALNAP to investigate how to measure humanitarian performance. Their report ‘Making it Count’ assessed 71 existing indicators for measuring progress related to the Agenda for Humanity. A majority of the indicators are problematic, either due to unclear methodologies or because the data is partial or unavailable. But as the report concludes, collective monitoring of progress is possible if the sector considers it a priority. This finding is supported by OCHA’s new report ‘Staying the Course: Delivering on the Ambition of the World Humanitarian Summit.’

The Centre stands ready to support this work, and indeed, it aligns with our own goal of increasing the use and impact of data in the sector. If the humanitarian system is able to develop a collective results framework with baseline measurements, we might avoid yet another ‘transformative’ process a few years from now. Instead we could just make adjustments to the framework and keep turning the screw on achieving a more efficient, effective, and principled response to people in need.

What’s Next

We have big plans for 2019. Here are a few things to look out for in the coming year:

- We will roll out the ‘crisis data grid’ on HDX to help users know what core data is available and missing for priority humanitarian crises. Take a look at the initial examples for Yemen and Sudan.

- We will publish the OCHA Data Responsibility Guidelines to help staff and partners navigate the technical and ethical aspects of working with sensitive data.

- We will create an automated process for organizations to detect high-risk data before it is exposed publicly on HDX.

- We will develop a new workstream for predictive analytics that supports anticipatory financing.

- We will initiate work on the two-year roadmap for our data literacy workstream, following research that is currently underway with Dalberg Design.

Feedback

Let us know how we are doing and what you would like to see more of in 2019. You can reach us at centrehumdata@un.org or on Twitter @humdata. Thank you for being part of our community!

If you haven’t been able to follow our work over the past year, take a look at our timeline of top highlights for 2018.